There are few error messages as frustratingly vague and completely blocking as ‘data generation failed due to insufficient input’. It’s a full stop that halts progress, whether you’re a developer building an application, a data scientist training a model, or a researcher analyzing a dataset. This error signals a fundamental breakdown in your data pipeline, but its origins can be deceptively complex.

This article is a multi-disciplinary guide to solving that problem. We bridge the gap between high-level data theory and practical, in-the-weeds troubleshooting to provide a single, holistic resource. Whether you’re wrestling with SQL queries, Python scripts, machine learning models, or research study design, this guide has a dedicated solution for you.

We will first diagnose the common root causes of this error. From there, we will dive into context-specific solutions for your field, and finally, we’ll establish a proactive framework to prevent these errors from ever happening again.

Diagnosing the root causes of ‘insufficient input’ errors

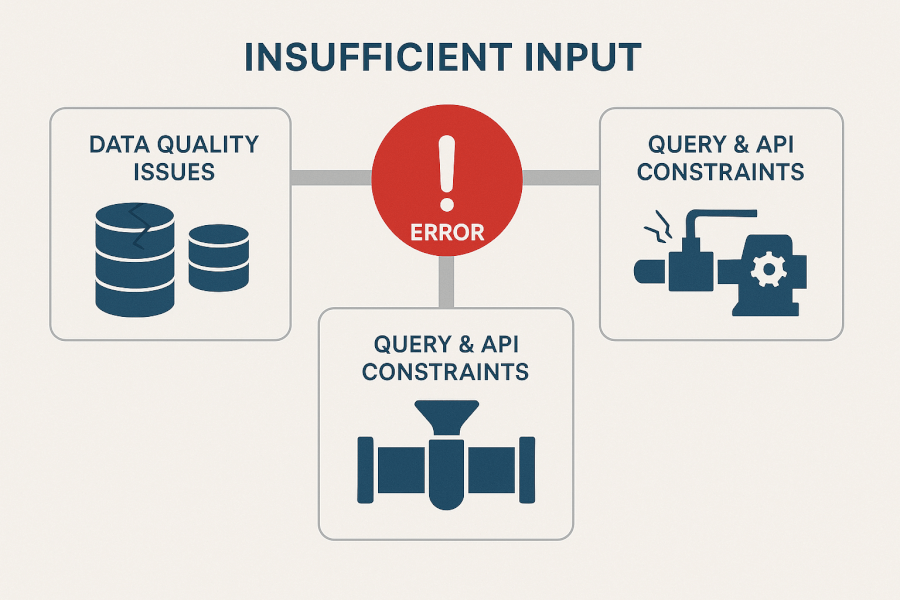

The ‘insufficient input’ error is a symptom, not the disease. The first and most critical step is always diagnosis. The problem rarely lies with the tool that throws the error, but rather with the data—or lack thereof—that was fed into it. The root causes generally fall into one of three categories.

Common cause 1: data quality and integrity issues

At the most basic level, the data you’re trying to process might technically exist but be functionally useless. This includes common data quality problems like nulls, blanks, or zero-value records. A table with a thousand rows where the key column is entirely empty is, for all practical purposes, ‘insufficient input’. Similarly, you may encounter incorrect data types or formatting, such as text strings in a field that should be purely numeric. These can cause a processing step to fail or discard rows, leaving an empty, insufficient output for the next stage.
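A minimal sketch of an up-front quality check, assuming records arrive as a list of Python dictionaries. The field names `customer_id` and `amount` are hypothetical. Instead of letting blank keys and text-in-numeric-fields silently shrink the data, it counts how many rows fail each rule and raises an explicit error if nothing usable remains.

```python
from typing import Any


def is_blank(value: Any) -> bool:
    """Treat None, empty strings and whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def is_numeric(value: Any) -> bool:
    """True if the value can be parsed as a number (rejects text like 'N/A')."""
    if value is None or isinstance(value, bool):
        return False
    try:
        float(value)
    except (TypeError, ValueError):
        return False
    return True


def usable_records(records: list[dict[str, Any]], key: str, amount: str) -> list[dict[str, Any]]:
    """Keep only records with a non-blank key and a numeric amount.

    Raises ValueError when nothing usable is left, reporting how many rows
    failed each check instead of surfacing a vague 'insufficient input'.
    """
    blank_keys = sum(is_blank(r.get(key)) for r in records)
    bad_amounts = sum(not is_numeric(r.get(amount)) for r in records)
    usable = [r for r in records if not is_blank(r.get(key)) and is_numeric(r.get(amount))]
    if not usable:
        raise ValueError(
            f"0 of {len(records)} rows usable: {blank_keys} blank '{key}', "
            f"{bad_amounts} non-numeric '{amount}'"
        )
    return usable


rows = [
    {"customer_id": "C1", "amount": "19.99"},
    {"customer_id": "", "amount": "5.00"},     # blank key
    {"customer_id": "C3", "amount": "N/A"},    # text in a numeric field
    {"customer_id": None, "amount": 0},        # null key
]
print(len(usable_records(rows, "customer_id", "amount")))  # 1
```

Note that this sketch accepts zero as a valid number. Whether zero-value records count as "insufficient" depends on your domain, so add that rule only where it applies.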

Common cause 2: database or API constraints

Often, the data source itself is fine, but the way you’re asking for the data is the problem. Your query filters, JOINs, or API parameters can be too restrictive, resulting in an empty or incomplete dataset. A classic real-world example is a query with a date range filter that is too narrow—for instance, asking for sales data from a weekend when the business was closed. The query runs successfully but returns zero records, which becomes an ‘insufficient input’ error for the next process that expects sales data.
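Here is one way to reproduce the weekend example and guard against it. This sketch uses Python’s built-in `sqlite3` module with a hypothetical `sales` table that only has rows for Friday 1 March and Monday 4 March 2024. The dates are stored as ISO-8601 strings, so `BETWEEN` compares them correctly. The query itself always succeeds, so the check has to be on the result.

```python
import sqlite3


def fetch_sales(conn: sqlite3.Connection, start: str, end: str) -> list[tuple]:
    """Return sales between start and end (inclusive); fail loudly if none match."""
    rows = conn.execute(
        "SELECT sale_date, amount FROM sales WHERE sale_date BETWEEN ? AND ?",
        (start, end),
    ).fetchall()
    if not rows:
        # The query ran fine; it is the *filter* that produced an empty result.
        raise LookupError(f"No sales between {start} and {end}; check the date range.")
    return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_date TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("2024-03-01", 120.0), ("2024-03-04", 95.5)],  # Friday and Monday only
)

print(len(fetch_sales(conn, "2024-03-01", "2024-03-04")))  # 2
# fetch_sales(conn, "2024-03-02", "2024-03-03")  # weekend only: raises LookupError
```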

Common cause 3: flaws in the data generating process (DGP)

From a research and statistical perspective, the problem can be much further upstream in the data generating process (DGP). This refers to the real-world mechanism that creates the data in the first place. If there are fundamental flaws in this process, the resulting data will be inherently insufficient. Examples include a broken sensor on a piece of machinery that fails to log performance metrics, a poorly designed survey with confusing questions that leads to non-responses, or a data entry protocol that allows for incomplete records to be saved.
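A flaw in the DGP often shows up as missing stretches of data rather than as an error. The sketch below flags gaps in a sensor log. It assumes the sensor is supposed to write a reading at a fixed interval; the 5-minute interval and the timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps: list[datetime], expected: timedelta) -> list[tuple[datetime, datetime]]:
    """Return (previous, next) pairs of readings spaced further apart than expected."""
    ordered = sorted(timestamps)
    return [
        (prev, nxt)
        for prev, nxt in zip(ordered, ordered[1:])
        if nxt - prev > expected
    ]


start = datetime(2024, 5, 1, 8, 0)
# Hypothetical sensor that should log every 5 minutes but goes silent after 08:10
readings = [start + timedelta(minutes=m) for m in (0, 5, 10, 40, 45)]
for prev, nxt in find_gaps(readings, timedelta(minutes=5)):
    print(f"No data from {prev:%H:%M} to {nxt:%H:%M}")  # No data from 08:10 to 08:40
```

In practice you would usually allow some tolerance (for example, `expected * 1.5`) so that minor clock jitter is not reported as a gap.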

For developers: troubleshooting database and API input errors

For software and data engineers, this error often appears at the intersection of services, where one process hands off data to another. The solution lies in defensive coding and rigorous validation at every step.

SQL solution: validating query results and constraints

Before your application code even touches the data, you can validate it at the source. Instead of running a complex operation and hoping for the best, run a lightweight check first. A simple COUNT(*) on your query can tell you if you have zero rows before you attempt a more intensive data generation step.

In my experience, a common culprit is the misuse of JOIN types. An INNER JOIN will drop all records that don’t have a match in both tables, which can unexpectedly result in an empty set. Switching to a LEFT JOIN and then handling potential nulls in your application logic is often a more robust solution.
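The difference is easy to reproduce. This minimal sketch uses Python’s built-in `sqlite3` module with hypothetical `Orders` and `Customers` rows in which every order points to a customer that does not exist. The INNER JOIN returns nothing. The LEFT JOIN keeps the orders and exposes the missing names as NULL (`None` in Python).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Customers (CustomerID INTEGER PRIMARY KEY, CustomerName TEXT);
    CREATE TABLE Orders (OrderID INTEGER PRIMARY KEY, CustomerID INTEGER, OrderDate TEXT);
    -- Both orders reference customers 7 and 8, which are missing from Customers
    INSERT INTO Customers VALUES (1, 'Acme');
    INSERT INTO Orders VALUES (100, 7, '2024-02-01'), (101, 8, '2024-02-02');
    """
)

inner = conn.execute(
    "SELECT o.OrderID, c.CustomerName FROM Orders o "
    "INNER JOIN Customers c ON o.CustomerID = c.CustomerID ORDER BY o.OrderID"
).fetchall()
left = conn.execute(
    "SELECT o.OrderID, c.CustomerName FROM Orders o "
    "LEFT JOIN Customers c ON o.CustomerID = c.CustomerID ORDER BY o.OrderID"
).fetchall()

print(inner)  # []  (every order was dropped)
print(left)   # [(100, None), (101, None)]  (orders kept, names are NULL)

# Handle the NULLs explicitly in application code instead of losing the rows
for order_id, name in left:
    print(order_id, name or "<unknown customer>")
```

With the LEFT JOIN, the decision about incomplete rows moves into your code, where it can be logged or handled, instead of being made silently by the query.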

```sql
-- T-SQL (SQL Server) syntax
-- First, check if any records match the criteria
DECLARE @RecordCount INT;

SELECT @RecordCount = COUNT(*)
FROM Orders o
INNER JOIN Customers c ON o.CustomerID = c.CustomerID
WHERE o.OrderDate >= '2024-01-01' AND c.Country = 'USA';

-- Only proceed if records exist
IF @RecordCount > 0
BEGIN
    -- Proceed with your main data generation logic...
    SELECT o.*, c.CustomerName
    FROM Orders o
    INNER JOIN Customers c ON o.CustomerID = c.CustomerID
    WHERE o.OrderDate >= '2024-01-01' AND c.Country = 'USA';
END
```

Python/API solution: defensive coding and input validation

When receiving data from an API, never assume the payload will be what you expect. Always check the content and structure of the response before processing it. This means checking if a list is empty or if a dictionary is missing required keys. By adding assertions or raising explicit, informative exceptions, you can turn a vague ‘insufficient input’ error into a clear, actionable message.

```python
import requests


def process_user_data(api_url, user_id):
    """
    Fetches and processes user data from an API with validation.
    """
    # A timeout prevents a hung request from blocking the pipeline indefinitely
    response = requests.get(f"{api_url}/users/{user_id}", timeout=10)
    response.raise_for_status()  # Raises an exception for bad status codes (4xx or 5xx)
    data = response.json()

    # Validate the received data before processing: it must be a non-empty list
    if not isinstance(data, list) or not data:
        raise ValueError(
            f"Insufficient input from API for user_id: {user_id}. "
            "Response was empty or not a list."
        )

    # Each record must be a dictionary containing the keys used below
    for i, user_record in enumerate(data):
        if not isinstance(user_record, dict) or "name" not in user_record:
            raise ValueError(f"Record {i} for user_id {user_id} is missing the 'name' key.")

    # If validation passes, proceed with processing
    for user_record in data:
        print(f"Processing record for {user_record['name']}")


# Example usage:
# process_user_data("https://api.example.com", 123)
```

For data scientists: overcoming data scarcity in ML models

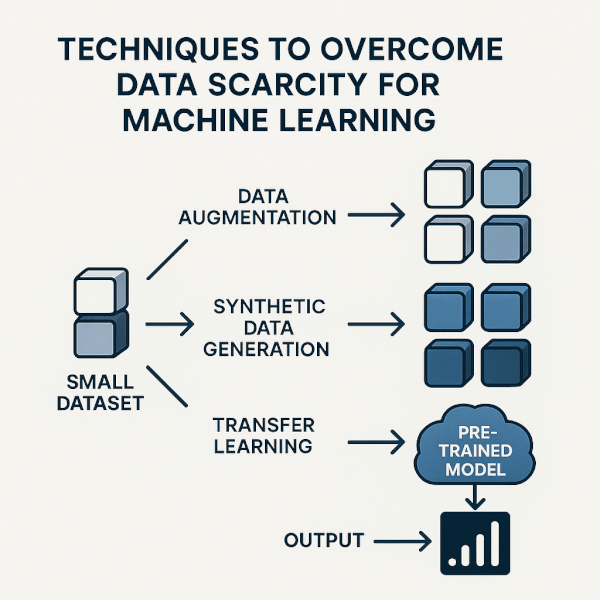

In machine learning, the ‘insufficient input’ problem manifests as a small dataset that is unable to train a robust or accurate model. When you don’t have enough data, your model can’t learn the underlying patterns, leading to poor performance. Fortunately, several powerful techniques can address this.

Technique 1: data augmentation

Data augmentation involves creating new training examples by making small, realistic modifications to your existing data. For images, this could mean flipping, rotating, cropping, or altering the brightness. For text, techniques include back-translation (translating to another language and back again) or synonym replacement. Libraries like albumentations for images or nlpaug for text can programmatically expand your dataset many times over.
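The image and text transformations described above can be sketched in plain Python. This assumes images are small 2-D lists of pixel values and uses a hypothetical, hand-written synonym table. Libraries such as albumentations and nlpaug provide richer, tested versions of these operations, so treat this only as an illustration of the idea.

```python
import random

# Hypothetical synonym table; a real project would use a thesaurus or a library such as nlpaug
SYNONYMS = {"quick": ["fast", "speedy"], "happy": ["glad", "cheerful"]}


def flip_horizontal(image: list[list[int]]) -> list[list[int]]:
    """Mirror each row of a 2-D pixel grid (left becomes right)."""
    return [row[::-1] for row in image]


def rotate_90(image: list[list[int]]) -> list[list[int]]:
    """Rotate a 2-D pixel grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment_image(image: list[list[int]]) -> list[list[list[int]]]:
    """Return the original plus a flipped and a rotated variant (3 examples from 1)."""
    return [image, flip_horizontal(image), rotate_90(image)]


def synonym_replace(sentence: str, rng: random.Random) -> str:
    """Swap each word that has a known synonym for a randomly chosen one."""
    return " ".join(
        rng.choice(SYNONYMS[w]) if w in SYNONYMS else w for w in sentence.split()
    )


img = [[1, 2],
       [3, 4]]
print(augment_image(img))  # [[[1, 2], [3, 4]], [[2, 1], [4, 3]], [[3, 1], [4, 2]]]
print(synonym_replace("a quick test", random.Random(0)))  # "a fast test" or "a speedy test"
```

Only apply transformations that keep the label valid. A horizontal flip is harmless for most product photos, but it would corrupt a dataset of handwritten digits or text.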

Technique 2: synthetic data generation

When augmentation isn’t enough, you can turn to synthetic data generation: creating entirely new, artificial data points that share the statistical properties of your original data. A sophisticated method for this uses Generative Adversarial Networks (GANs). In a GAN, a generator network produces candidate samples and a discriminator network learns to tell them apart from real data, which pushes the generator toward increasingly realistic output. As detailed in a recent study, there are many advanced strategies for overcoming data scarcity, and synthetic generation is one of the most powerful.
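A working GAN is too long for a short example. To illustrate the core idea of sampling new rows from statistics learned on real ones, the following sketch swaps in a far simpler technique: it fits an independent normal distribution to each numeric column and samples from it. Unlike a GAN, it ignores correlations between columns and any non-normal shape, so treat it as illustrative only. The column names and values are hypothetical.

```python
import random
import statistics


def fit_columns(rows: list[dict[str, float]]) -> dict[str, tuple[float, float]]:
    """Estimate (mean, sample standard deviation) for every numeric column."""
    if len(rows) < 2:
        raise ValueError("Need at least two rows to estimate a standard deviation")
    return {
        col: (statistics.mean(r[col] for r in rows), statistics.stdev(r[col] for r in rows))
        for col in rows[0]
    }


def sample_synthetic(params: dict[str, tuple[float, float]], n: int, seed: int = 0) -> list[dict[str, float]]:
    """Draw n artificial rows, sampling each column independently from its fitted normal."""
    rng = random.Random(seed)
    return [{col: rng.gauss(mu, sigma) for col, (mu, sigma) in params.items()} for _ in range(n)]


real = [
    {"amount": 20.0, "items": 2.0},
    {"amount": 35.0, "items": 3.0},
    {"amount": 50.0, "items": 5.0},
]
params = fit_columns(real)
print(params["amount"])  # (35.0, 15.0)
synthetic = sample_synthetic(params, n=1000)
print(len(synthetic))  # 1000
```

Before training on synthetic rows, check that they fall in plausible ranges. A normal distribution can, for example, produce negative transaction amounts, which a real dataset would never contain.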

Technique 3: transfer learning

Instead of training a model from scratch on your small dataset, transfer learning lets you start from a model that has already been trained on a massive dataset (such as ImageNet for images or a large Wikipedia text corpus for language). You can then use this pre-trained model as a feature extractor or fine-tune its final layers on your specific data. This approach, which is grounded in academic research on data scarcity, leverages the knowledge learned from the large dataset and dramatically reduces the amount of data you need for your task. Hubs like Hugging Face and TensorFlow Hub offer thousands of pre-trained models for this purpose.

| Technique | Best For | Complexity | Example Use Case |
|---|---|---|---|
| Data Augmentation | Image, Text, Audio Data | Low | Creating slightly varied images of a product for a classification model. |
| Synthetic Data | Tabular, Time-Series Data | High | Generating realistic but artificial customer transaction data for fraud detection. |
| Transfer Learning | Image, Text Classification | Medium | Fine-tuning a pre-trained model, such as one released by Google, to classify your specific document types. |

For researchers: ensuring sufficient input in study design

For academic and scientific researchers, the best way to fix an ‘insufficient input’ error is to prevent it from ever occurring. This means building robustness into the conceptual stage of your project, long before a single line of code is written.

The importance of power analysis

Before collecting any data, a statistical power analysis is essential. This analysis determines the minimum sample size needed to detect an effect of a given size at a chosen significance level (α) with a chosen statistical power (commonly 0.8, meaning an 80% chance of detecting a real effect). Running a power analysis prevents you from investing significant time and resources into a study only to find out later that your dataset was too small to draw any meaningful conclusions.
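As a rough illustration, here is a sketch of the textbook normal-approximation formula for comparing two group means, n = 2((z₁₋α/₂ + z_power) / d)² per group, where d is Cohen’s d (the standardized difference between the means). It uses only Python’s standard library. Because it is an approximation, it comes out slightly lower than an exact t-test calculation, which gives 64 per group for d = 0.5. Dedicated tools such as statsmodels’ `TTestIndPower` or G*Power are the better choice for a real study.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate n per group for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_{1-alpha/2} + z_{power}) / d) ** 2,
    where d is Cohen's d (standardized mean difference).
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


print(sample_size_per_group(0.5))  # 63
```

Because the effect size appears squared in the denominator, halving the effect you want to detect roughly quadruples the required sample.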

Designing robust data collection protocols

Your data collection protocol is your first line of defense against quality issues. This involves creating standardized data entry forms with clear validation rules (e.g., numeric fields that reject text, mandatory fields that cannot be left blank). Using controlled vocabularies or dropdown menus instead of free-text fields can drastically reduce errors and ensure the data is consistent and sufficient for analysis.
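The validation rules above can be expressed as a small, explicit schema. In this sketch, the survey fields `participant_id`, `age` and `region` and the allowed region values are hypothetical. The function returns a list of problems so that a data-entry form can refuse to save an incomplete record and tell the person why.

```python
from typing import Any

# Hypothetical survey schema
REQUIRED = {"participant_id", "age", "region"}
NUMERIC = {"age"}
CONTROLLED = {"region": {"North", "South", "East", "West"}}  # dropdown values


def validate_entry(entry: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the entry can be saved."""
    problems = []
    for field in sorted(REQUIRED):
        value = entry.get(field)
        if value is None or str(value).strip() == "":
            problems.append(f"{field} is required")
    for field in sorted(NUMERIC):
        value = entry.get(field)
        # Reject text such as "thirty"; only whole numbers are accepted here
        if value not in (None, "") and not str(value).strip().isdigit():
            problems.append(f"{field} must be a whole number")
    for field, allowed in CONTROLLED.items():
        value = entry.get(field)
        if value not in (None, "") and value not in allowed:
            problems.append(f"{field} must be one of {sorted(allowed)}")
    return problems


print(validate_entry({"participant_id": "P01", "age": "34", "region": "North"}))  # []
print(validate_entry({"participant_id": "P02", "age": "thirty", "region": "Nrth"}))
```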

Pilot studies and data pre-validation

Never commit to a full-scale study without first running a small pilot study. A pilot test involves running your entire data pipeline—from collection and entry to cleaning and preliminary analysis—on a small subset of data. This invaluable process allows you to identify and fix potential ‘insufficient input’ issues, such as confusing survey questions or faulty data import scripts, before they compromise your entire research project.
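A pilot run is most useful when it shows exactly where records disappear. The sketch below assumes the pipeline can be written as an ordered list of named functions, each taking and returning a list of rows. It records the row count after every stage and stops at the first stage that outputs nothing. The stage names and sample answers are hypothetical.

```python
from typing import Any, Callable

Stage = Callable[[list[Any]], list[Any]]


def pilot_run(sample: list[Any], stages: list[tuple[str, Stage]]) -> list[tuple[str, int]]:
    """Run every pipeline stage on a small sample, recording the row count after each.

    Raises RuntimeError at the first stage whose output is empty, so the pilot
    pinpoints where data is lost instead of failing later with a vague error.
    """
    counts = [("input", len(sample))]
    data = sample
    for name, stage in stages:
        data = stage(data)
        counts.append((name, len(data)))
        if not data:
            raise RuntimeError(f"Stage '{name}' produced no rows; counts so far: {counts}")
    return counts


# Hypothetical stages: clean raw survey answers, then drop non-responses
stages = [
    ("parse", lambda rows: [r.strip() for r in rows]),
    ("drop_blank", lambda rows: [r for r in rows if r]),
]
print(pilot_run([" yes", "no ", "  "], stages))
# [('input', 3), ('parse', 3), ('drop_blank', 2)]
```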

Key diagnostic checklist

| Symptom | Potential Cause Area | First Action to Take |
|---|---|---|
| A SQL query returns zero rows | Database / Query Logic | Check your WHERE clauses and JOIN types. |
| An API call returns an empty list [] | Developer / Code Logic | Implement a check for the length of the returned data. |
| ML model accuracy is very low | Data Science / Scarcity | Evaluate data augmentation or transfer learning. |
| Final dataset is smaller than expected | Research / Methodology | Review data collection protocols and initial sample size. |

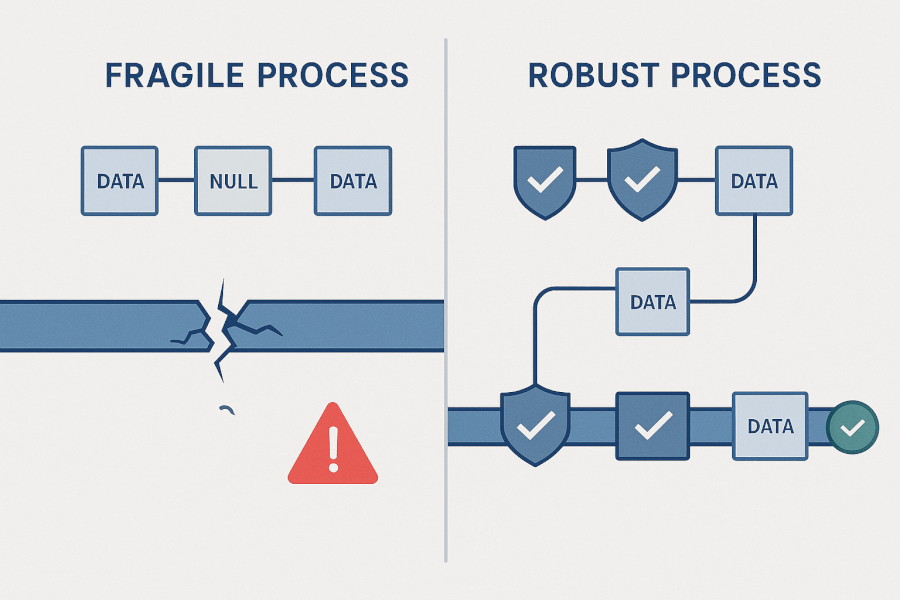

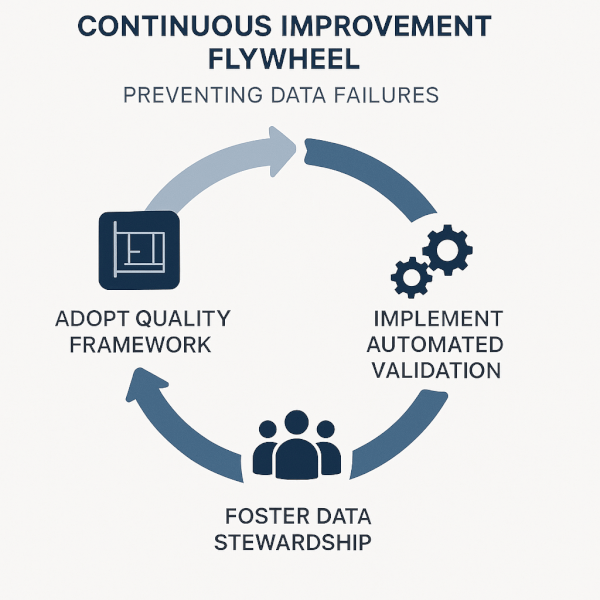

A proactive framework for preventing data generation failures

Fixing errors is a reactive process. The true goal is to build resilient systems where these errors are rare. This requires shifting your mindset from ad-hoc fixes to a proactive, structured approach to data quality.

Adopt a data quality framework

Instead of treating data quality as an afterthought, adopt a formal framework to manage it. The principles of a strong data quality framework are well established and focus on key dimensions like accuracy, completeness, timeliness, and consistency. By drawing on the official framework for data quality used by federal statistical agencies or on the UN Data Quality Assurance Framework, you can build a system that ensures data is fit for purpose from the very beginning.
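Dimensions like completeness and timeliness become actionable once you measure them. This sketch computes two simple scores. The definitions are illustrative and not taken from either framework named above; the field names and the 30-day freshness window are hypothetical. An empty dataset deliberately scores 0.0 rather than raising a division-by-zero error, so it shows up as insufficient.

```python
from datetime import datetime, timedelta


def completeness(records: list[dict], fields: list[str]) -> float:
    """Share of (record, field) cells that are present and non-blank."""
    if not records:
        return 0.0
    filled = sum(1 for r in records for f in fields if r.get(f) not in (None, ""))
    return filled / (len(records) * len(fields))


def timeliness(records: list[dict], now: datetime, max_age: timedelta) -> float:
    """Share of records updated within max_age of `now`."""
    if not records:
        return 0.0
    fresh = sum(1 for r in records if now - r["updated_at"] <= max_age)
    return fresh / len(records)


now = datetime(2024, 6, 1)
records = [
    {"id": 1, "email": "a@example.com", "updated_at": now - timedelta(days=1)},
    {"id": 2, "email": "", "updated_at": now - timedelta(days=40)},
]
print(completeness(records, ["id", "email"]))        # 0.75
print(timeliness(records, now, timedelta(days=30)))  # 0.5
```

Tracking scores like these over time, with a threshold for each, turns "fit for purpose" into something a pipeline can check.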

Implement automated data validation pipelines

Modern data stacks allow for data quality checks to be automated and embedded directly into your pipeline. Tools and libraries like Great Expectations for Python can programmatically profile your data at each step. You can define assertions such as “this column can never be null” or “values in this column must be between 1 and 100.” If the data violates these rules, the pipeline can be stopped automatically, preventing insufficient data from corrupting downstream processes.
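Great Expectations has its own API, which has changed across major versions, so the sketch below does not use it. It is a hypothetical, plain-Python stand-in that shows the same idea: declare expectations per column, check each batch, and raise to halt the pipeline on any failure. The `score` column and the helper names are illustrative.

```python
from typing import Any, Callable

# Each expectation: (description, check applied to all values of one column)
Expectation = tuple[str, Callable[[list[Any]], bool]]


def not_null() -> Expectation:
    return ("values are never null", lambda vals: all(v is not None for v in vals))


def between(low: float, high: float) -> Expectation:
    return (
        f"values are between {low} and {high}",
        lambda vals: all(v is not None and low <= v <= high for v in vals),
    )


def validate(rows: list[dict[str, Any]], suite: dict[str, list[Expectation]]) -> None:
    """Halt the pipeline (raise) if the batch is empty or any expectation fails."""
    if not rows:
        # all([]) is True, so an empty batch would otherwise pass every check
        raise ValueError("Validation failed: batch contains no rows")
    failures = [
        f"{column}: {desc}"
        for column, expectations in suite.items()
        for desc, check in expectations
        if not check([r.get(column) for r in rows])
    ]
    if failures:
        raise ValueError("Validation failed: " + "; ".join(failures))


suite = {"score": [not_null(), between(1, 100)]}
validate([{"score": 42}, {"score": 99}], suite)  # passes silently
# validate([{"score": 42}, {"score": None}], suite)  # raises ValueError
```

The explicit empty-batch check matters because checks of the form "every value satisfies X" are vacuously true on zero rows. Without it, an empty batch would pass every expectation, and the "insufficient input" failure would only surface downstream.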

Foster a culture of data stewardship

Ultimately, data quality is a human challenge. Fostering a culture of data stewardship involves assigning clear ownership and responsibility for specific data domains to individuals or teams. When people feel accountable for the quality of the data they produce and manage, they are more likely to follow best practices and proactively identify issues before they become blocking errors for someone else.

Frequently asked questions

What is the most common cause of ‘insufficient input’ errors?

The most common cause is unexpected empty or null data returned from a preceding step, such as a database query or API call that found no matching records.

This is often due to overly restrictive filters, incorrect JOIN logic in SQL, or flaws in the initial data collection that result in a valid but empty dataset. The error occurs when a subsequent process tries to operate on this empty set, expecting data to be present.

How can I generate more data for my machine learning model?

You can generate more data through techniques like data augmentation (modifying existing data), synthetic data generation (creating new data with GANs), or by using transfer learning with pre-trained models.

The best method depends on your data type; augmentation works well for images, while transfer learning is highly effective for both text and image tasks when you have a small dataset. Synthetic data is a more complex but powerful option for tabular data.

Is ‘insufficient input’ a database error or a code error?

It can be both. The error message itself often originates in your code when it tries to process an empty result, but the root cause is frequently in the database query that failed to return any data.

A good troubleshooting process involves checking the output of each step in your data pipeline to pinpoint whether the data was lost in the query (database side) or handled incorrectly in the application (code side).

From reactive fixes to resilient design

The ‘data generation failed due to insufficient input’ error is more than a simple bug; it’s a sign that a foundational assumption about your data has been violated. As we’ve seen, this error can be solved across all disciplines by moving from ad-hoc fixes to a structured approach of diagnosis, context-specific solutions, and proactive prevention.

This guide has provided a unified framework that empowers developers to write defensive code, data scientists to overcome data scarcity, and researchers to design robust studies. By adopting these principles, you can build more resilient, reliable, and powerful data systems.

Start today by adopting a proactive data quality mindset in your next project.