The transition of large language models (LLMs) from experimental pilot projects to mission-critical, production-ready systems is the defining challenge for today’s chief technology officers (CTOs) and vice presidents of data science. While the promise of LLM-driven natural language querying (NLQ) is compelling, the real, transformative value lies in automating the complex, technical work that currently consumes data teams.

The industry standard remains a painful one: data engineers and scientists still spend up to 80% of their time on manual data cleaning, transformation, and feature engineering—commonly referred to as data wrangling. This manual time sink is the primary bottleneck preventing enterprises from maximizing the value of their vast, often unstructured, data lakes.

The core solution is a definitive shift toward scalable, auditable LLM agents that can execute advanced data analysis and automated feature engineering. This requires a new architecture and a rigorous governance layer. This article provides a technical playbook focused on the two major obstacles to production deployment: code hallucination and the lack of robust LLM data governance.

We detail how to build trustworthy systems by leveraging the DataForge AI orchestration framework, ensuring your pipelines are not just fast, but auditable, secure, and predictable.

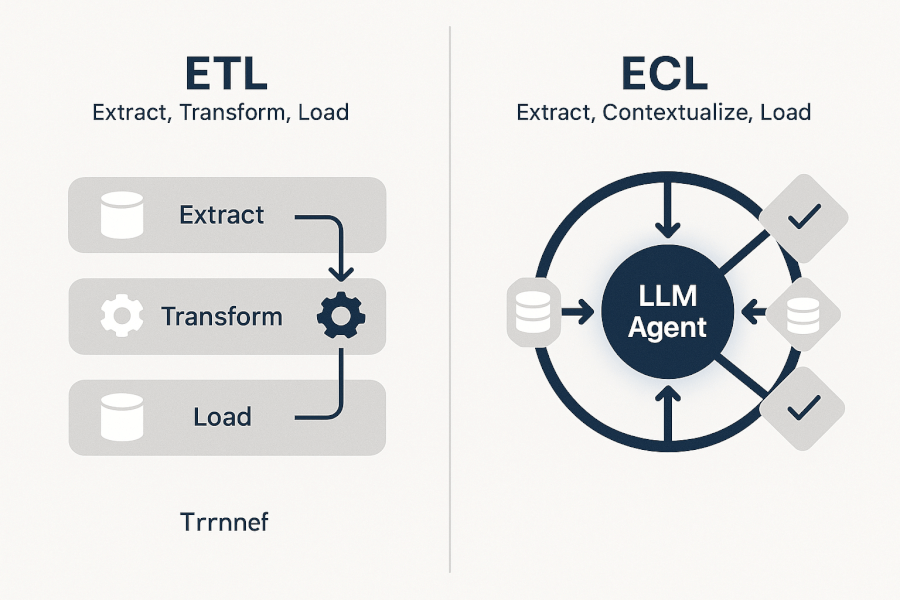

The LLM-driven paradigm shift: from ETL/wrangling to ECL pipelines

The traditional extract, transform, load (ETL) paradigm, built for static, structured data sources, is fundamentally inefficient when faced with the volume, velocity, and semantic complexity of modern enterprise data.

The obsolescence of manual data transformation and feature engineering

When dealing with massive, unstructured data lakes, traditional ETL processes become bottlenecks. Data engineers spend days writing custom, brittle scripts simply to parse, normalize, and reconcile disparate data formats. This manual process is not only a massive drain on resources; it also misses opportunities to leverage semantic context. Feature engineering, the process of creating new variables from raw data to improve model performance, is slow, expensive, and often reliant on tribal knowledge.

Reducing data wrangling time is therefore paramount. A recent IEEE study on automated data wrangling with LLMs demonstrates the potential for significant efficiency gains: when LLMs semantically understand and structure data automatically, the 80/20 split between preparation and analysis can be reversed.

Defining the extract-contextualize-load (ECL) framework

We propose the extract-contextualize-load (ECL) framework as the modern replacement for ETL in LLM-driven pipelines. ECL places the LLM agent at the center of the transformation process, ensuring data is not just moved, but intelligently enriched and verified.

The ECL process works as follows:

- Extract: Semantic data extraction is performed across complex sources. LLMs analyze unstructured data (e.g., PDFs, emails, logs) to identify and extract key entities and relationships.

- Contextualize: LLM agents enrich and structure the extracted data. They apply semantic understanding, normalize formats, fill missing values based on context, and perform automated feature engineering before the data is ever loaded.

- Load: Structured, verifiable data is loaded into the data warehouse or vector store, complete with necessary metadata for auditability.
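
The three stages above can be sketched in a few lines of Python. This is a minimal illustration rather than DataForge AI’s implementation: the `Record` type, the function names, the sample text, and the 10,000 threshold are all hypothetical, and a regular expression stands in for the LLM extraction call so the example runs without a model.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Record:
    source_id: str
    fields: dict
    metadata: dict = field(default_factory=dict)


def extract(source_id, text):
    """Extract: a regex stands in for the LLM that would pull out entities."""
    invoice = re.search(r"Invoice\s+#?(\w+)", text)
    amount = re.search(r"\$\s?([\d,]+\.\d{2})", text)
    return Record(source_id, {
        "invoice_id": invoice.group(1) if invoice else None,
        "amount_raw": amount.group(1) if amount else None,
    })


def contextualize(record):
    """Contextualize: normalize formats and derive a feature before loading."""
    raw = record.fields.get("amount_raw")
    amount = float(raw.replace(",", "")) if raw else None
    fields = {
        "invoice_id": record.fields.get("invoice_id"),
        "amount_usd": amount,
        "is_large": amount is not None and amount >= 10_000,  # hypothetical feature
    }
    metadata = {
        **record.metadata,
        "transform": "contextualize_v1",
        "transformed_at": datetime.now(timezone.utc).isoformat(),
    }
    return Record(record.source_id, fields, metadata)


def load(record, warehouse):
    """Load: store the structured row together with its audit metadata."""
    warehouse.append({"source_id": record.source_id, **record.fields,
                      "_meta": record.metadata})


warehouse = []  # stand-in for a warehouse table or vector store
raw_text = "Invoice #A17 total due: $12,500.00"
load(contextualize(extract("email-001", raw_text)), warehouse)
```

Keeping each stage a plain function makes it straightforward to replace the regex in `extract` with a model call while leaving the normalization and metadata steps deterministic.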

Automated data preparation use cases for the enterprise

The power of ECL lies in its ability to automate complex, high-value tasks:

- Semantic parsing of complex business documents, such as automatically extracting key terms and financial figures from contracts, regulatory filings, or internal financial statements.

- Automated PII masking and normalization layers, ensuring sensitive customer or corporate data is protected before processing.

- Creation of synthetic training data for new machine learning models, accelerated by the LLM’s ability to understand the statistical properties and constraints of real-world data.
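
As an illustration of the PII masking use case, the sketch below replaces email addresses and US Social Security numbers with placeholders before text reaches a model. The two patterns and the placeholder tokens are assumptions chosen for the example; a production masking layer would cover many more identifier types and would typically combine patterns with entity recognition.

```python
import re

# Hypothetical patterns for two common identifier types.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(text):
    """Replace matched identifiers with fixed placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_SSN.sub("[SSN]", text)


masked = mask_pii("Contact jane.doe@example.com, SSN 123-45-6789.")
```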

Building trustworthy systems: mitigating hallucination in LLM-generated data science code

The most critical barrier to deploying LLM agents in production is the risk of hallucination. While factual hallucination (making up an answer) is a known challenge, the far greater and more mission-critical risk for technical leaders is LLM hallucination in code generation.

The mission-critical risk of LLM code hallucination

When an LLM generates incorrect or insecure code, be it SQL for a financial reporting pipeline or Python for a data transformation script, the consequences can be catastrophic. An incorrect SQL join could corrupt an entire quarterly financial report, leading to compliance failures and major business errors. An improperly generated Python script could introduce security vulnerabilities or silently discard critical data points. This risk is why most enterprises hesitate to fully trust LLMs for ETL operations.

Technical mitigation strategies beyond basic RAG

To build truly trustworthy systems, basic retrieval-augmented generation (RAG) alone is insufficient for code generation. Production systems need multi-layered strategies that go further than solutions that stop at simple RAG.

Advanced mitigation techniques include:

- Pre-generation guardrails: Using strict semantic parsing and constrained function calling to limit the scope of the LLM’s output to pre-approved data schemas and function libraries.

- Post-generation validation: This is the crucial step. Functional clustering, a technique referenced in MIT research, programmatically verifies code logic before execution: the system runs the generated code through a series of tests or checks in a constrained environment to confirm its functional correctness and security profile.

- External verification: Research on LLM code generation hallucination mitigation in the ACM Digital Library makes the need for external, domain-specific validation systems clear, moving beyond the LLM’s own self-correction capabilities.
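
One way to make the first two layers concrete for generated SQL is to run the query against a small in-memory sample under a restrictive authorizer. The sketch below uses the standard-library `sqlite3` module and its `set_authorizer` hook; the table names, sample rows, and expected result are hypothetical, and this is an illustration of constrained execution in general, not of functional clustering or of any specific product.

```python
import sqlite3

ALLOWED_TABLES = {"orders"}  # hypothetical pre-approved schema


def make_authorizer(allowed_tables):
    """Permit read-only queries and column reads on approved tables only."""
    def authorizer(action, arg1, arg2, db_name, source):
        if action in (sqlite3.SQLITE_SELECT, sqlite3.SQLITE_FUNCTION):
            return sqlite3.SQLITE_OK
        if action == sqlite3.SQLITE_READ and arg1 in allowed_tables:
            return sqlite3.SQLITE_OK
        return sqlite3.SQLITE_DENY  # writes, DDL, and unapproved tables
    return authorizer


def run_checked(sql, expected):
    """Execute generated SQL on sample data and compare with a known answer."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE orders (id INTEGER, amount REAL);
        CREATE TABLE salaries (employee TEXT, salary REAL);
        INSERT INTO orders VALUES (1, 10.0), (2, 32.5);
        INSERT INTO salaries VALUES ('a', 1.0);
    """)
    conn.set_authorizer(make_authorizer(ALLOWED_TABLES))
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.DatabaseError as exc:
        return False, f"rejected: {exc}"
    finally:
        conn.close()
    if rows != expected:
        return False, "unexpected result on sample data"
    return True, "ok"


good = run_checked("SELECT SUM(amount) FROM orders", [(42.5,)])
unapproved = run_checked("SELECT salary FROM salaries", [(1.0,)])
destructive = run_checked("DELETE FROM orders", [])
```

The authorizer acts as the guardrail (scope is limited to approved tables and read-only statements), while the comparison against a known answer on sample rows is a simple form of post-generation validation.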

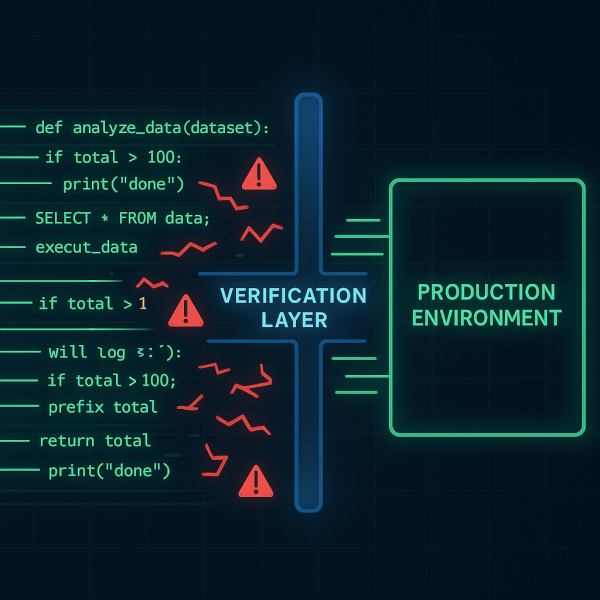

The DataForge AI verification layer and deterministic LLM-ETL

DataForge AI addresses the code hallucination risk directly with its proprietary Verification Layer. This layer operates as a deterministic, multi-model consensus system:

- The LLM agent generates the required data science code (e.g., a Python transformation script).

- The Verification Layer executes functional checks, schema validation, and security analysis.

- The system uses a separate, constrained validation model to confirm the generated code’s intended output against a small subset of the source data.

This process makes LLM-driven ETL operations auditable and predictable, and it is designed to stop hallucinated code before it executes in production.
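
The internals of the Verification Layer are proprietary and not described here, so the following is only a generic sketch of the kind of checks listed above, applied to a generated Python transformation. The allow-list, the banned call names, and the convention that generated code defines a `transform(row)` function are all assumptions. Note that `exec` is not a security sandbox; a real system would run the code in an isolated process or container.

```python
import ast

ALLOWED_IMPORTS = {"math", "statistics"}          # hypothetical allow-list
BANNED_CALLS = {"eval", "exec", "open", "compile", "__import__"}


def static_check(source):
    """Security analysis: reject unapproved imports and dangerous builtins."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error: {exc.msg}"]
    problems = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            names = [node.module or ""]
        else:
            names = []
        for name in names:
            if name.split(".")[0] not in ALLOWED_IMPORTS:
                problems.append(f"import not allowed: {name}")
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in BANNED_CALLS):
            problems.append(f"call not allowed: {node.func.id}")
    return problems


def verify(source, sample_rows, expected_keys):
    """Run static checks, then a functional and schema check on sample rows."""
    problems = static_check(source)
    if problems:
        return False, problems
    namespace = {}
    exec(compile(source, "<generated>", "exec"), namespace)  # not a sandbox
    transform = namespace.get("transform")
    if not callable(transform):
        return False, ["generated code does not define transform(row)"]
    try:
        output = [transform(dict(row)) for row in sample_rows]
    except Exception as exc:
        return False, [f"runtime error on sample: {exc!r}"]
    if any(set(row) != set(expected_keys) for row in output):
        return False, ["output schema mismatch"]
    return True, []


GOOD = "def transform(row):\n    return {'id': row['id'], 'amount_usd': round(row['amount'], 2)}\n"
BAD = "import os\ndef transform(row):\n    os.remove('x')\n    return row\n"
sample = [{"id": 1, "amount": 3.14159}]
good_result = verify(GOOD, sample, {"id", "amount_usd"})
bad_result = verify(BAD, sample, {"id", "amount_usd"})
```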

Model selection strategy: balancing advanced reasoning with cost and sovereignty

The choice of the underlying LLM is a strategic decision for data leaders, balancing the need for advanced reasoning (often associated with proprietary models like GPT-4) against the critical factors of cost, latency, and data sovereignty.

Llama 3 vs. GPT-4: a quantitative analysis for data analysis benchmarks

Senior data leaders require a quantitative, cost-focused comparison that moves beyond anecdotal performance. For complex data analysis benchmarks, model performance must be weighed against operational expenditure.

| Model | Code Generation Accuracy (Target) | Complex Reasoning Score | Context Window (Tokens) | Cost (Est. per 1M Tokens) |
|---|---|---|---|---|
| GPT-4o | High (95%+) | Excellent | 128k | Medium-High |
| Llama 3 (70B) | High (90%+) | Very Good | 8k/70k (Fine-tuned) | Low |
| Mixtral 8x22B | Good (85%+) | Good | 64k | Medium-Low |

While GPT-4o offers the highest raw code generation accuracy and complex reasoning, its cost per token and reliance on a third-party API can be prohibitive for the high-volume, repetitive data transformation tasks that dominate LLM-driven data analysis.

The case for open-source LLMs for ETL and data sovereignty

For organizations dealing with highly regulated or sensitive data, reliance on cloud LLMs is a major blocker. This is where open-source models, such as Llama 3, become strategically vital. When deployed on-premise or in a private cloud, these models offer:

- Data sovereignty: Ensuring data never leaves the controlled, compliant environment.

- Cost control: Eliminating per-token pricing in favor of fixed infrastructure costs.

- Customization: Allowing for deep fine-tuning for domain-specific tasks without vendor lock-in.

For high-volume, security-critical LLM-driven ETL operations, an open-source model governed by a rigorous enterprise architecture is often the superior choice.
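
The cost-control argument comes down to a break-even volume: the monthly token count at which per-token API spend equals a fixed infrastructure bill. The helper below shows the arithmetic; the dollar figures are placeholders chosen for illustration, not measured or quoted prices.

```python
def break_even_tokens_per_month(fixed_monthly_cost, api_price_per_million):
    """Monthly token volume at which API spend equals fixed hosting cost."""
    if api_price_per_million <= 0:
        raise ValueError("api_price_per_million must be positive")
    return fixed_monthly_cost / api_price_per_million * 1_000_000


# Hypothetical inputs: $6,000/month for self-hosted infrastructure
# versus a blended API price of $5 per million tokens.
threshold = break_even_tokens_per_month(6_000, 5)
```

With these placeholder inputs the threshold is 1.2 billion tokens per month; below that volume the API is cheaper on token cost alone, and above it the fixed-cost deployment is.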

Achieving domain-specific accuracy through RAG grounding

For enterprise data analysis, achieving domain-specific accuracy rarely requires expensive, time-consuming full model fine-tuning. Instead, robust RAG grounding provides superior results with a lower operational cost.

The strategy involves anchoring the LLM’s knowledge base not just in general information, but in proprietary data schemas, business glossary definitions, and internal knowledge bases. This ensures that when the LLM generates SQL or Python, it is grounded in the company’s specific truth, preventing the model from relying on general, and potentially incorrect, public knowledge.
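
A minimal sketch of this grounding step: retrieve the schema and glossary entries most relevant to a question and place them in the prompt ahead of the request for SQL. The table descriptions and the question are hypothetical, and simple token overlap stands in for the embedding-based retrieval a production pipeline would use.

```python
import re

# Hypothetical schema and glossary snippets; in practice these would be
# retrieved from a vector store rather than a dictionary.
SCHEMA_DOCS = {
    "orders": "orders(order_id, customer_id, order_date, net_revenue): "
              "one row per order; net_revenue excludes tax.",
    "customers": "customers(customer_id, region, segment): one row per customer.",
    "tickets": "tickets(ticket_id, customer_id, opened_at): support tickets.",
}


def tokenize(text):
    return set(re.findall(r"[a-z_]+", text.lower()))


def retrieve(question, k=2):
    """Rank snippets by token overlap with the question and keep the top k."""
    q_tokens = tokenize(question)
    ranked = sorted(SCHEMA_DOCS.items(),
                    key=lambda item: len(q_tokens & tokenize(item[1])),
                    reverse=True)
    return [doc for _, doc in ranked[:k]]


def build_prompt(question):
    """Assemble a prompt that restricts the model to the retrieved schema."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question))
    return ("Use only the tables and columns listed below.\n"
            f"Schema:\n{context}\n"
            f"Question: {question}\nSQL:")


prompt = build_prompt("Total net_revenue by region for each customer segment")
```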

Architecture for advanced analysis: designing production-grade RAG pipelines and multi-agent systems

Moving beyond basic LLM prompts requires a sophisticated, production-grade architecture built around operationalizing RAG and orchestrating complex multi-step workflows.

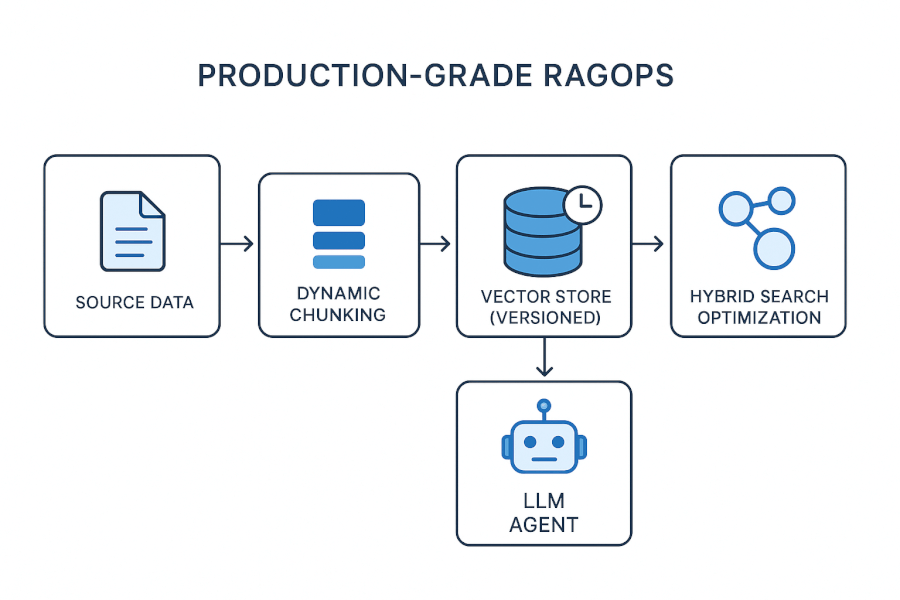

Blueprint for production-grade RAG pipelines (RAGOps)

RAGOps is the operational framework for managing RAG in production. For enterprise architects, this means treating the vector store and its related infrastructure as a core, mission-critical component of the data stack. The literature on production-grade RAGOps and lifecycle management outlines these key components:

- Data versioning: Establishing protocols to version both the source data and the generated vector embeddings.

- Dynamic chunking: Implementing dynamic chunking strategies based on metadata and semantic context, rather than fixed lengths, to improve retrieval quality for complex data.

- Hybrid search optimization: Combining vector search (semantic similarity) with traditional keyword search to maximize recall and precision.
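
For the hybrid search component, one widely used way to combine a vector ranking with a keyword ranking is reciprocal rank fusion (RRF). The source does not name a fusion method, so treat RRF as one reasonable choice rather than the prescribed one. The document IDs below are made up, and `k=60` is the constant commonly used with RRF.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) per list it appears in (rank is
    1-based); scores are summed and sorted in descending order.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Break ties by document ID so the output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


vector_hits = ["doc_a", "doc_b", "doc_c"]   # hypothetical semantic results
keyword_hits = ["doc_b", "doc_d", "doc_a"]  # hypothetical keyword results
fused = reciprocal_rank_fusion([vector_hits, keyword_hits])
```

Documents that appear in both lists (`doc_b`, `doc_a`) rise above those found by only one retriever, which is the recall-and-precision effect hybrid search is after.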

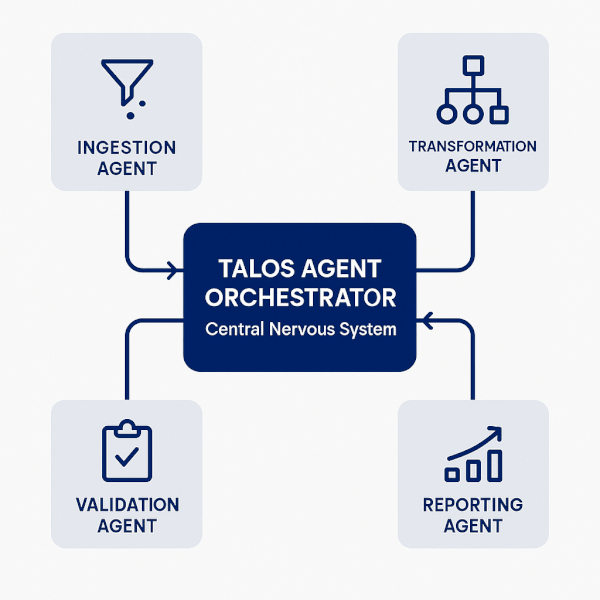

Orchestrating agent swarms for complex analytical workflows

Advanced data analysis, such as a complex financial forecasting model requiring data ingestion, cleaning, feature creation, modeling, and reporting, cannot be handled by a single prompt. It requires a multi-agent system, or “agent swarm,” where specialized LLMs handle specific tasks.

DataForge AI’s proprietary Talos Agent Orchestrator acts as the central nervous system managing these complex workflows. It deploys specialized agents:

- Ingestion agent: Handles the initial semantic parsing (ECL extraction).

- Transformation agent: Executes the verified Python/SQL code (ECL contextualization).

- Validation agent: Runs the code through the Verification Layer.

- Reporting agent: Synthesizes the final analysis into a human-readable report.

This orchestration mitigates the risk of single-point failure and ensures each step is handled by a specialized, constrained LLM, increasing reliability and auditability.
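
The orchestration pattern can be illustrated with a small sequential runner. This is not the Talos Agent Orchestrator, whose interface is not documented here; the `Context` type, the agent functions, and the `code_verified` flag are hypothetical stand-ins. The sketch also assumes validation runs before transformation, since the transformation agent is described as executing verified code.

```python
from dataclasses import dataclass, field


class StageError(Exception):
    """Raised by an agent to halt the pipeline."""


@dataclass
class Context:
    payload: dict
    trace: list = field(default_factory=list)


def ingestion_agent(ctx):
    ctx.payload["rows"] = [{"amount": "10"}, {"amount": "5"}]  # stand-in data
    return ctx


def validation_agent(ctx):
    if not ctx.payload.get("code_verified", False):
        raise StageError("generated code was not verified")
    return ctx


def transformation_agent(ctx):
    ctx.payload["amounts"] = [float(r["amount"]) for r in ctx.payload["rows"]]
    return ctx


def reporting_agent(ctx):
    ctx.payload["report"] = f"total={sum(ctx.payload['amounts'])}"
    return ctx


STAGES = [
    ("ingestion", ingestion_agent),
    ("validation", validation_agent),
    ("transformation", transformation_agent),
    ("reporting", reporting_agent),
]


def run_pipeline(ctx, stages=STAGES):
    """Run agents in order, recording each outcome; stop at the first failure."""
    for name, agent in stages:
        try:
            ctx = agent(ctx)
        except StageError as exc:
            ctx.trace.append((name, f"failed: {exc}"))
            break
        ctx.trace.append((name, "ok"))
    return ctx


completed = run_pipeline(Context({"code_verified": True}))
blocked = run_pipeline(Context({"code_verified": False}))
```

Because every stage appends to `trace`, a blocked run shows exactly which agent stopped the workflow and why, without any downstream agent having run.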

Visualizing the enterprise LLM data analysis reference architecture

A high-level view of the recommended production architecture shows a governed flow from ingestion through verification to reporting.

This structure places the Verification Layer and the Talos Orchestrator as non-negotiable governance checkpoints, ensuring all data transformation is traceable and correct.

Enterprise governance: secure on-premise deployment and compliance-as-code

For the enterprise, speed and efficiency cannot come at the expense of security and compliance. Robust on-premise LLM data governance is essential for managing sensitive data and adhering to regulatory requirements.

Technical requirements for secure LLM deployment with sensitive data

Deploying LLMs securely, whether on-premise or in a private cloud environment, requires specific infrastructure controls:

- Network isolation: The LLM and its associated vector store must operate in a strictly isolated network segment, air-gapped from the public internet.

- PII masking layers: Automated PII masking and de-identification must occur at the ingress layer before data is ever presented to the LLM, regardless of the model’s location.

- Access control (RBAC): Role-based access control must be implemented not just for the data, but also for the specific LLM agents and the functions they are authorized to call.
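
Agent-level access control can be as simple as checking every function call against a per-agent allow-list before dispatching it. In the sketch below, the agent names, function names, and registry are hypothetical; a real deployment would load the mapping from a policy store instead of hard-coding it.

```python
# Hypothetical mapping of agents to the functions they may call.
AGENT_PERMISSIONS = {
    "ingestion_agent": {"read_source"},
    "transformation_agent": {"read_staging", "write_staging"},
    "reporting_agent": {"read_warehouse"},
}

# Hypothetical function registry with trivial stand-in implementations.
REGISTRY = {
    "read_source": lambda: ["raw row"],
    "read_staging": lambda: ["staged row"],
    "write_staging": lambda rows: len(rows),
    "read_warehouse": lambda: ["final row"],
}


def call_function(agent, function_name, *args):
    """Dispatch a function call only if the agent is authorized to make it."""
    if function_name not in AGENT_PERMISSIONS.get(agent, set()):
        raise PermissionError(f"{agent} may not call {function_name}")
    return REGISTRY[function_name](*args)


written = call_function("transformation_agent", "write_staging", ["a", "b"])
```

An unknown agent gets an empty permission set, so the default is deny.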

Implementing auditability and data lineage in LLM pipelines

Responsible AI demands a complete audit trail for every action taken by an LLM agent. This is crucial for debugging, compliance, and providing data lineage. An auditable system must log:

- The exact user prompt.

- The raw LLM response (the generated code).

- The verification layer’s decision (pass/fail and reason).

- The final executed code and the resulting transformed dataset.

This granular logging ensures clear data lineage for every LLM-transformed dataset, allowing data leaders to trace any output back to its original source and transformation logic.
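
A sketch of what one such audit record might look like, using only the standard library. The field names are hypothetical. Two details go beyond the list above and are assumptions of this example: the dataset is stored as a SHA-256 fingerprint instead of inline, and each record carries the hash of the previous one so that later tampering with the log is detectable.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(obj):
    """Stable SHA-256 digest of any JSON-serializable object."""
    encoded = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def audit_record(prompt, raw_response, passed, reason,
                 executed_code, dataset, prev_hash=""):
    """Build one log entry covering the four items an audit trail needs."""
    record = {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "user_prompt": prompt,
        "raw_llm_response": raw_response,
        "verification": {"passed": passed, "reason": reason},
        "executed_code": executed_code,
        "dataset_sha256": fingerprint(dataset),
        "prev_hash": prev_hash,  # links this entry to the previous one
    }
    record["record_hash"] = fingerprint(record)
    return record


rows = [{"id": 1, "amount_usd": 3.14}]
code = "SELECT id, ROUND(amount, 2) AS amount_usd FROM orders"
first = audit_record("round order amounts", code, True, "all checks passed",
                     code, rows)
second = audit_record("same, for refunds", code, False, "schema mismatch",
                      None, [], prev_hash=first["record_hash"])
log_line = json.dumps(first, sort_keys=True)  # one JSON line per record
```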

Governance-as-code (GaaC) and the NIST risk framework

To automate compliance checks, governance-as-code (GaaC) principles should be applied. GaaC embeds compliance checks directly into the continuous integration/continuous delivery (CI/CD) pipeline.

This means that any new LLM agent or pipeline update is automatically tested against pre-defined security and compliance policies (e.g., PII handling, data retention rules) before it is deployed. This strategy aligns with the NIST AI Risk Management Framework Generative AI Profile, which provides guidelines for managing risks associated with generative AI systems.
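
In practice, a GaaC check can be a small function that a CI job runs against each pipeline’s configuration and that fails the build when any policy is violated. The policy names, configuration keys, and the 365-day retention limit below are hypothetical examples, not requirements taken from the NIST profile.

```python
# Hypothetical policies: each maps a name to a predicate over a pipeline config.
POLICIES = {
    "pii_masking_enabled": lambda cfg: cfg.get("pii_masking") is True,
    "retention_within_limit": lambda cfg: 0 < cfg.get("retention_days", 0) <= 365,
    "verification_required": lambda cfg: cfg.get("verification_layer") == "required",
}


def policy_violations(config):
    """Return the sorted names of all policies the config fails."""
    return sorted(name for name, check in POLICIES.items() if not check(config))


compliant = {"pii_masking": True, "retention_days": 90,
             "verification_layer": "required"}
risky = {"pii_masking": False, "retention_days": 900}

compliant_result = policy_violations(compliant)
risky_result = policy_violations(risky)
```

A CI step would then exit non-zero whenever `policy_violations` returns a non-empty list, blocking the deployment until the configuration is fixed.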

The enterprise LLM data playbook: three immediate action items for CTOs

| Action | Why It Matters |
|---|---|
| Prioritize verification | Immediately implement technical guardrails like DataForge AI’s Verification Layer to eliminate code hallucination risk before any LLM code is executed. |
| Shift to ECL | Reallocate data engineering resources to pilot the Extract-Contextualize-Load (ECL) process, aiming to reverse the 80/20 data wrangling rule. |
| Formalize RAGOps | Adopt the RAGOps framework to manage your RAG pipelines in production, ensuring data versioning and refresh protocols meet enterprise standards. |

Frequently asked questions about enterprise LLM data analysis

How accurate are LLMs for SQL generation in a governed environment?

Accuracy is highly variable for raw LLMs, which often fail on complex enterprise schemas when no governance is in place. A properly implemented verification layer can push functional accuracy to near-deterministic levels. Factors affecting accuracy include the model size, the quality of RAG grounding on enterprise schemas, and the stringency of the pre- and post-generation guardrails.

What is RAG in data analysis and why is it critical for the enterprise?

RAG (retrieval-augmented generation) in data analysis is an architectural pattern that grounds an LLM’s output in verifiable, proprietary enterprise data, preventing factual hallucination. It is critical because it ensures the LLM generates answers and code based on the domain-specific truth of the company’s data assets, rather than its general training data.

What are the best practices for mitigating LLM hallucination in production data pipelines?

The best practice is a multi-layered verification system that includes pre-generation prompt engineering with strict schema constraints, functional code validation in a sandbox environment, and a deterministic post-generation check (like DataForge AI’s Verification Layer). This moves the system from probabilistic to auditable.

Conclusion: the enterprise data architect as orchestrator

The future of advanced data analysis is one where LLM agents handle the heavy lifting of data preparation and feature engineering, but this future is only viable if it is governed. Data leaders must transition from focusing on simple LLM usage to orchestrating secure, auditable pipelines.

By adopting the ECL paradigm, implementing a Verification Layer to eliminate code hallucination, and formalizing architecture under RAGOps, the enterprise can drive substantial efficiency without compromising security or compliance. DataForge AI’s framework empowers data leaders to be the orchestrators of this new, governed data economy.

Ready to deploy governed LLM data pipelines that eliminate code hallucination? Download the DataForge AI Enterprise RAGOps blueprint to map your production architecture and implement these strategies today.